It’s highly likely that you’ve never had to ask yourself the question: “How do I test a virtual keyboard?”

It’s understandable that most people don’t think about it, as virtual keyboards are an intrinsic part of a mobile operating system. The keyboard almost becomes an extension of your hand, like a symbiosis between your brain and the electronic device!

But when the question does arise, usually because you need to test a customized virtual keyboard for an app or platform, it can be difficult to know where to start.

How do you test a virtual keyboard?

The quick and simple answer would be – open up any application that allows you to write text and press each letter with your finger to form words, right?

Unfortunately, it’s not that simple. It can even become impossible to test in some cases.

For those of us who often work with a mobile device, the use of the virtual keyboard becomes second nature and we just press the keys without even thinking about what we are doing. One important thing we overlook is whether we type the words correctly because the autocorrect comes to our rescue. The autocorrect feature is our savior (or occasionally turns against us when it adds a word that does not fit with what we were thinking…).

As you will see in just a moment, a keyboard is not just a bunch of letters arranged as if someone had scattered Scrabble tiles over the table! A keyboard has many layers behind it, many configurations, and many diversifications that need to be tested to ensure that it fulfills its main function: accurate and clear communication.

Before we take a look at keyboard testing in detail, let’s first define what we mean by ‘testing’. Virtual keyboard testing involves verifying that the keyboard works as expected and checking the overall usability, functionality, stability, etc. A keyboard that works well but is not usable is pointless.

Test types

The Fleksy keyboard undergoes a variety of tests to ensure that it works well and is fully usable, highly compatible and stable. Here are some of the tests involved:

- Unit tests

- Integration tests

- User interface tests

- Functional tests

- Compatibility tests

- Usability tests

- Performance tests

- Security tests

- Data capture tests

The above tests may be manual or automated and can be executed on real devices or simulators.

Each type of test has a scope and an objective. Not all types of testing need to run all the time, nor are they mutually exclusive. The tests complement each other and together they help to give you a more objective view of the keyboard quality.

Testing is how the quality of the keyboard is measured. Quality is not subjective and you should always be able to evaluate the quality of a product, i.e. not only knowing if something fails, but also knowing what aspects can be improved further.

So how do we measure the quality of a keyboard? The main criterion is whether each of its features or layers works correctly.

Keyboard layers

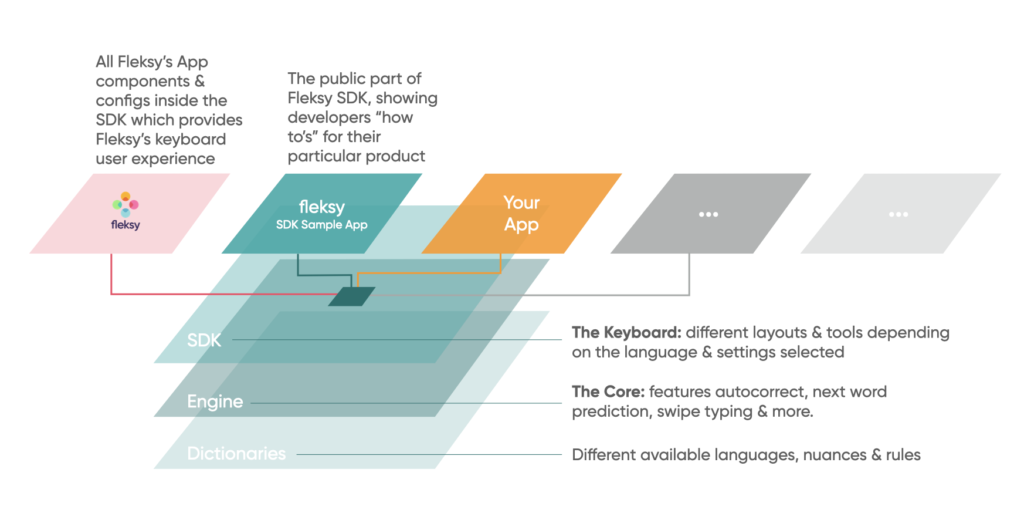

The Fleksy keyboard is made up of the following layers:

- Engine: The core of the keyboard that features autocorrect, next word prediction, etc.

- Dictionaries: Stores all the different available languages and their specific nuances and rules

- SDK: The pieces of code that communicate between the application and the software engine that manages the different keyboard configurations

- Sample app: The visible part of the SDK that allows you to change settings and test keyboard settings

- Fleksy Keyboard: The actual keyboard itself, featuring different layouts and tools depending on the language and settings selected.

Testing processes

In order to check all the layers listed above, a variety of testing processes are required. Some tests will be manual and others automated using different techniques, programming languages and frameworks. The settings depend on which test is being performed and the platform it’s being carried out on.

At Fleksy, the following testing process (or testing strategy) is carried out:

- Identify the functionality to be developed

- Investigate and document the functionality to be developed

- Validate the functionality to be developed

- Define the necessary tests to cover all the layers involved

- Develop the functionality

- Create unit tests related to the functionality developed

- Create UI tests that are possible to execute

- Create end-to-end tests that verify critical functionalities

- Prepare the continuous integration system for this new development

- Verify that the application compiles and the automated tests are executed

- Perform a manual review of the application if necessary

Pre-development process

Before creating the code for a new functionality, a preliminary investigation is required, either using mockups or virtualizing the functionality to show it to users before proceeding. Alternatively, you can document development initiatives, which must be verified and validated by the interested parties (stakeholders). In this way, it is possible to discard ideas that are not going to be useful or are unsuitable for development.

Once the development has been approved, Quality Assurance (“QA”) can define the acceptance criteria necessary to cover each of the functionalities, usually writing high-level tests in Gherkin language, such as:

Case 1: User taps the spacebar twice to insert a period automatically

Given a user that has the setting “Double space period” activated

When she types a word and taps the spacebar twice

Then a period and blank space will automatically be typed

And the next word will start in uppercase.

In this way, both developers and QA can be guided by the acceptance criteria to develop the functionality and verify it.
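
To make the link between acceptance criteria and automated checks concrete, here is a minimal Python sketch of how the "Double space period" scenario could be turned into a test. The `TextField` class is a hypothetical toy model written only for this example; it is not part of the Fleksy SDK, and a real test would drive the actual keyboard instead.

```python
class TextField:
    """Hypothetical toy model of a text field driven by a keyboard."""

    def __init__(self, double_space_period=True):
        self.double_space_period = double_space_period
        self.text = ""

    def tap(self, key):
        """Simulate a single key tap."""
        if key == " " and self._second_space_after_word():
            # Replace the first space with a period followed by a space.
            self.text = self.text[:-1] + ". "
            return
        if key.isalpha() and self.text.endswith(". "):
            # The word following an inserted period starts in uppercase.
            key = key.upper()
        self.text += key

    def type(self, keys):
        """Tap every character of `keys` in order."""
        for key in keys:
            self.tap(key)

    def _second_space_after_word(self):
        return (
            self.double_space_period
            and len(self.text) >= 2
            and self.text[-1] == " "
            and self.text[-2].isalnum()
        )


def test_double_space_inserts_period():
    field = TextField(double_space_period=True)  # Given the setting is on
    field.type("hello  ")                        # When: a word, then two spaces
    assert field.text == "hello. "               # Then: period and blank space
    field.type("world")
    assert field.text == "hello. World"          # And: next word is capitalized


test_double_space_inserts_period()
```

Each `Given`/`When`/`Then` step maps to one line of the test, which keeps the automated check readable next to the written criterion.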

All this is a part of testing and it is one of the most important stages to prevent cost overruns. If problems are caught early enough by rigorous testing, then you can avoid large expenses to fix things later on.

Development process

The developer of each platform must generate the code in accordance with all the previous documentation and cover this functionality with different unit tests (UT) and/or integration tests (IT). In parallel, QA can prepare, if necessary, end-to-end tests to further cover the testing performed on this functionality.

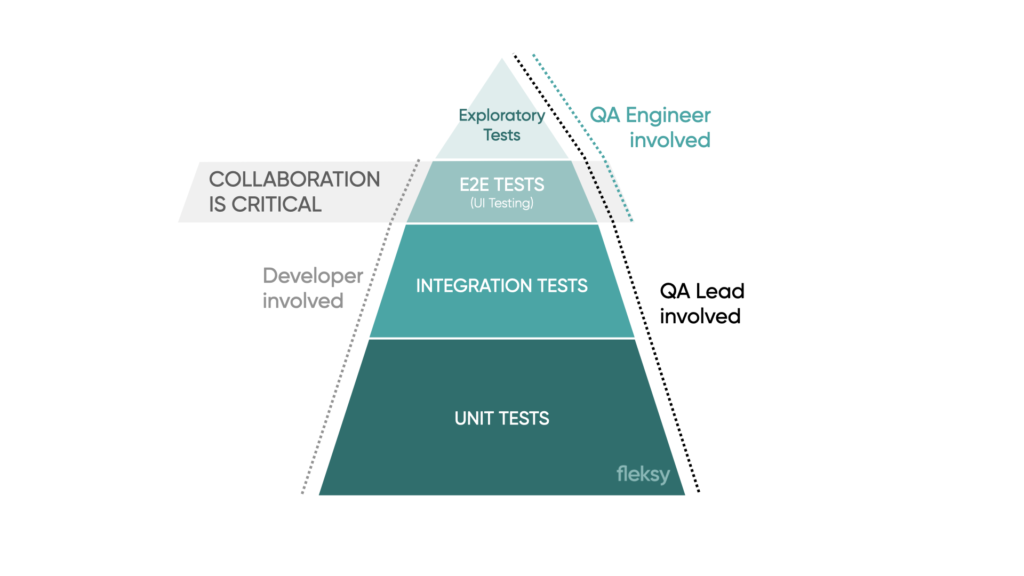

End-to-end tests are usually much slower than unit and integration tests, so it is recommended to follow Mike Cohn’s test pyramid to reduce time (and costs): the vast majority of tests should be unit and integration tests, and only a minority end-to-end tests.

Here, both developers and QA need to be aware of the test coverage so as not to duplicate tests. They can even help each other: for instance, developers can pass the raw keyboard layout data to QA, which allows QA to automate keystrokes by tapping on the coordinates where the keys are displayed.
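
As a rough illustration of tapping on coordinates, the sketch below turns layout data into a list of tap points for a word. The `Key` structure and the sample coordinates are assumptions made up for this example; the real layout data format is not described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Key:
    """Hypothetical layout entry: a label and its on-screen rectangle in pixels."""
    label: str
    x: int
    y: int
    width: int
    height: int

    def center(self):
        # Tapping the center of the key keeps the tap away from its neighbours.
        return (self.x + self.width // 2, self.y + self.height // 2)


def taps_for(text, layout):
    """Return the (x, y) tap point for every character in `text`."""
    by_label = {key.label: key for key in layout}
    missing = [ch for ch in text if ch not in by_label]
    if missing:
        raise KeyError(f"no key in layout for: {missing}")
    return [by_label[ch].center() for ch in text]


# Made-up coordinates for two keys.
LAYOUT = [
    Key("h", 540, 100, 100, 120),
    Key("i", 700, 0, 100, 120),
]

print(taps_for("hi", LAYOUT))  # [(590, 160), (750, 60)]
```

The resulting points can then be handed to whatever UI automation tool is in use, for example `adb shell input tap <x> <y>` on Android.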

All of these tests will be executed automatically in a continuous integration system.

The Continuous Integration System (“CIS”)

At Fleksy, part of the testing is done automatically and autonomously through a continuous integration system using Bitrise services. This makes all application versions available at any time and ensures that they meet the necessary requirements for use.

Let’s take the example of the pipeline used for the Fleksy SDK. Different workflows are configured which perform different actions:

- Create app versions

- Add the builds to the various distribution platforms

- Launch automated tests of various layers of the application

- Generate reports of test results.

This makes it possible to decide when to run these builds (merging a branch to develop, creating a release branch, etc.), the type of application to build (a test version, an official version for stores, etc.) and the types of tests to run.

Once the versions are compiled, the following automated tests of the application are run on emulators and real mobiles using a mobile farm:

- Dictionaries: autocorrect tests, data capture tests and language model tests

- Keyboard Layout: keyboard areas and key layout verifications

- Keyboard Typing: basic typing verification with the keyboard

- Data Capture verification: for security or medical applications, for instance

- Settings: keyboard permission and usability checks of the different settings visible in the sample app.
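
To give an idea of what a "Keyboard Layout" verification from the list above might look like, here is a small Python sketch that checks two properties: every key lies inside the keyboard area, and no two keys overlap. The `Rect` type and the sample geometry are assumptions for illustration only, not the actual checks run at Fleksy.

```python
from itertools import combinations
from typing import NamedTuple


class Rect(NamedTuple):
    """Axis-aligned rectangle in pixels (hypothetical layout representation)."""
    x: int
    y: int
    width: int
    height: int

    @property
    def right(self):
        return self.x + self.width

    @property
    def bottom(self):
        return self.y + self.height


def contains(outer, inner):
    """True if `inner` lies completely inside `outer`."""
    return (outer.x <= inner.x and outer.y <= inner.y
            and inner.right <= outer.right and inner.bottom <= outer.bottom)


def overlaps(a, b):
    """True if the two rectangles share any area (touching edges do not count)."""
    return a.x < b.right and b.x < a.right and a.y < b.bottom and b.y < a.bottom


def layout_problems(area, keys):
    """Return a human-readable list of layout violations (empty if none)."""
    problems = []
    for label, rect in keys.items():
        if not contains(area, rect):
            problems.append(f"{label!r} is outside the keyboard area")
    for (label_a, rect_a), (label_b, rect_b) in combinations(keys.items(), 2):
        if overlaps(rect_a, rect_b):
            problems.append(f"{label_a!r} overlaps {label_b!r}")
    return problems


area = Rect(0, 0, 300, 100)
keys = {
    "q": Rect(0, 0, 100, 100),
    "w": Rect(100, 0, 100, 100),
    "e": Rect(190, 0, 120, 100),  # deliberately too wide
}
for problem in layout_problems(area, keys):
    print(problem)
```

Returning a list of problems rather than failing on the first one makes the report more useful when a layout change breaks several keys at once.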

Once the entire verification process is finished, reports of the results of the tests and the compilation are generated and our team is informed via Slack.
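
How the Slack notification is produced is not detailed here. One common option is a Slack incoming webhook, which accepts a JSON body with a `text` field. The sketch below assumes that approach; the function names, the build label and the result format are all hypothetical.

```python
import json
import urllib.request


def build_slack_payload(build, results):
    """Summarize suite results (name -> passed?) as a Slack message payload."""
    failed = sorted(name for name, passed in results.items() if not passed)
    status = "FAILED" if failed else "PASSED"
    passed_count = len(results) - len(failed)
    lines = [f"Build {build}: {status} ({passed_count}/{len(results)} suites passed)"]
    lines += [f"- {name}" for name in failed]  # list only the failing suites
    return {"text": "\n".join(lines)}


def post_to_slack(webhook_url, payload):
    """POST the payload to an incoming webhook URL (supplied by the caller)."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return response.status


payload = build_slack_payload(
    "1.2.3", {"Dictionaries": True, "Keyboard Layout": False}
)
print(payload["text"])
```

Keeping the message construction separate from the network call means the summary can be unit tested without contacting Slack.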

If something fails during the process, the failure is reviewed on the continuous integration system to decide whether:

- it is a failure of the build itself, in which case that version is recompiled manually,

- it is a real bug that blocks the version until it’s fixed,

- it can be left for later, in which case the version is passed on to manual testing.

Manual testing

Once the new development has been verified with automated tests, it is possible (and recommended) to carry out different types of manual tests that are either very expensive or impossible to replicate with automated tests. This also includes tests that require human supervision, such as retina tests, fingerprint tests, usability tests, error guessing, etc.

At Fleksy, the following tests are done by the internal and outsourced QA team:

- Compatibility tests: verifying that everything works and displays correctly on different types, models and versions of devices

- Usability tests: checking usability and performance on different devices.

- Exploratory tests: free rein to test without following guided test cases

- Functional tests: following guided test cases.

Other tests performed

As mentioned earlier, the Fleksy keyboard is made up of different layers. In addition to the SDK and its sample app, which are verified through automated tests, the other layers have their own tests. The Engine test verifies that the language model works correctly (autocorrection, next-word prediction, etc.), and the Dictionaries test verifies that all available languages (more than 80) and their specificities work correctly.
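
A data-driven test is one simple way to check autocorrection: feed a list of typos with their expected corrections and measure how many come out right. The sketch below uses Python's `difflib` as a stand-in corrector; this is not how the Fleksy engine works, and the vocabulary, test cases and threshold are made up for the example.

```python
import difflib

# Tiny made-up vocabulary standing in for a real dictionary.
VOCABULARY = ["hello", "keyboard", "testing", "world"]


def autocorrect(typed, vocabulary=VOCABULARY):
    """Stand-in corrector: return the closest vocabulary word, if any."""
    if typed in vocabulary:
        return typed
    matches = difflib.get_close_matches(typed, vocabulary, n=1, cutoff=0.6)
    return matches[0] if matches else typed


def accuracy(cases, corrector=autocorrect):
    """Fraction of (typed, expected) pairs the corrector gets right."""
    hits = sum(1 for typed, expected in cases if corrector(typed) == expected)
    return hits / len(cases)


CASES = [
    ("helo", "hello"),         # missing letter
    ("keybaord", "keyboard"),  # transposed letters
    ("tseting", "testing"),    # transposed letters
    ("world", "world"),        # correct words must be left alone
]

# Fail the run if accuracy drops below an agreed threshold (arbitrary here).
assert accuracy(CASES) >= 0.75
print(f"autocorrect accuracy: {accuracy(CASES):.0%}")
```

Tracking an accuracy figure per language, rather than a plain pass or fail, makes it easier to spot a dictionary that has regressed between releases.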

Test documentation

To make sure that the testing is well documented, it’s good practice to have some processes implemented, such as:

- Release calendar: To prepare the test cases, coordinate the execution of the manual tests with QA, plan the reporting of results, etc.

- Documentation platform: For example, Confluence, Jira, a test case management tool, or even Excel, where it’s possible to inform the entire team of the tests carried out and their results.

- Test result reports: Each member of the team can be informed of the errors detected in the releases, criticality, status, etc. through graphs or concise data.

- Quality reports: Informing the entire team periodically (monthly and/or quarterly) of different data on the quality of the products, such as statistics on test coverage, the status of detected errors, improvement in lead time, etc.

Conclusion

Since the Fleksy SDK can be integrated into any mobile application, TV system, etc., the testing of the keyboard input method must be as exhaustive as possible. A number of different techniques are used to verify each of its layers.

The process of testing a virtual keyboard must adapt to continuous changes in platforms, new systems, and even new ways of writing.

It’s not a fixed process: you have to observe the evolution of technologies, anticipate what may be coming, and create new tests that cover these needs. It’s important not to stick to a single system or a single programming language, because a joint effort of the entire team is essential at all times.